Build a Spark Data Source API (real streaming)

Implement `SimpleDataSourceStreamReader`, define schema and offsets, and expose a custom format to read streaming events with control and observability, without external connectors.
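As a rough orientation, a minimal sketch of such a reader might look like the following. It assumes the Python Data Source API shipped with PySpark 4.0+; the class names `CounterStreamReader` and `CounterDataSource`, the format name `counter`, the single-column schema, and the fixed batch size are all hypothetical and chosen only for illustration. The import fallback is there only so the sketch can be read and exercised without Spark installed.

```python
try:
    from pyspark.sql.datasource import DataSource, SimpleDataSourceStreamReader
except ImportError:
    # Assumption: fall back to plain base classes when PySpark is not installed,
    # so the offset logic below can still be tried in isolation.
    DataSource = object
    SimpleDataSourceStreamReader = object


class CounterStreamReader(SimpleDataSourceStreamReader):
    """Hypothetical reader that emits consecutive integers in fixed-size batches."""

    BATCH_SIZE = 3  # illustrative value, not a Spark setting

    def initialOffset(self):
        # Offsets are plain dicts; the stream starts at zero.
        return {"offset": 0}

    def read(self, start):
        # Return the rows for the next micro-batch and the offset where it ends.
        end = {"offset": start["offset"] + self.BATCH_SIZE}
        return self.readBetweenOffsets(start, end), end

    def readBetweenOffsets(self, start, end):
        # Deterministic replay of a given offset range, used on recovery.
        return iter([(i,) for i in range(start["offset"], end["offset"])])

    def commit(self, end):
        # Nothing to clean up in this sketch.
        pass


class CounterDataSource(DataSource):
    """Hypothetical data source exposing the reader under the format name 'counter'."""

    @classmethod
    def name(cls):
        return "counter"

    def schema(self):
        return "value INT"

    def simpleStreamReader(self, schema):
        return CounterStreamReader()
```

With a Spark 4.0+ session, the source would typically be registered with `spark.dataSource.register(CounterDataSource)` and then read with `spark.readStream.format("counter").load()`.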

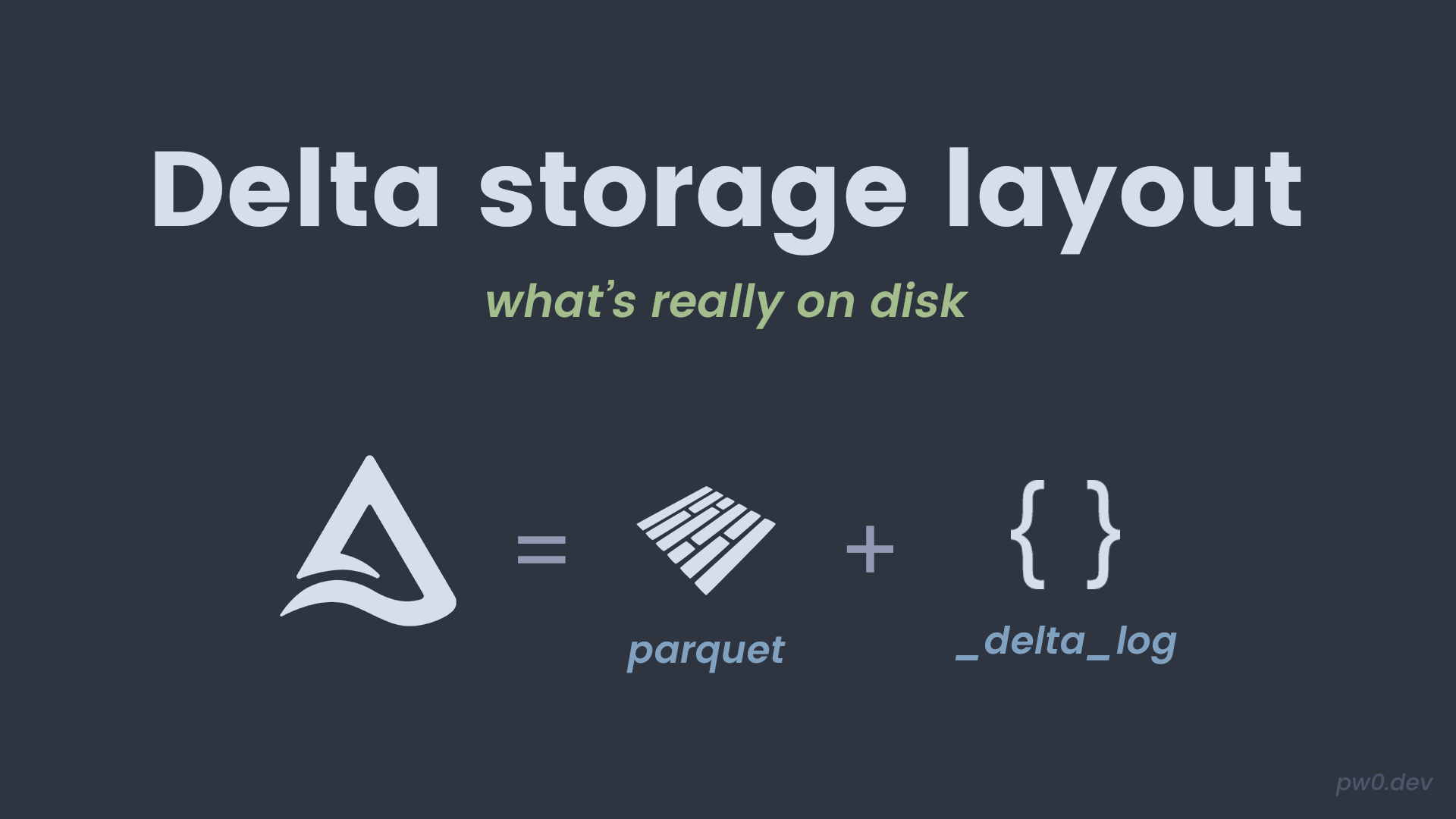

Explore the on‑disk layout, commits, and checkpoints, and see why it matters for performance, maintenance, and troubleshooting in production.

End‑to‑end walkthrough: create a Delta table, insert data, read, filter, and validate results with expected outputs. The minimal base before any optimization work.

Learn `versionAsOf` and `timestampAsOf`, validate changes, and understand when time travel is best for auditing, recovery, and regression analysis in Delta Lake.

Detect skewed joins in Spark and apply salting to spread hot keys. You will compare before/after stage and shuffle times, with a synthetic repro and a real dataset plus downloads at the end.

Connect local Kafka to Spark Structured Streaming, define a schema, and run a continuous read. Includes simple metrics and validations to confirm the stream is working.

Kafka CLI first steps: create topics, produce events, and consume them from the console in a reproducible local environment. Perfect for practice without cloud dependencies.

Explains offsets, partitions, and rebalances with a runnable example that shows how consumption is split across consumers and what happens when scaling or failures occur.

Practical guide with clear examples and expected outputs to master core DataFrame transformations. Includes readable chaining patterns and quick validations.

Hands‑on guide to bring up the local stack, check UI/health, and run a first job. Includes minimal checks to confirm Master/Workers are healthy and ready for the rest of the series.