PySpark basics for everyday work

Practical guide with clear examples and expected outputs to master core DataFrame transformations. Includes readable chaining patterns and quick validations.

Learn versionAsOf and timestampAsOf, validate changes, and understand when time travel is best for auditing, recovery, and regression analysis in Delta Lake.
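A minimal sketch of how the two reader options are typically used, assuming a Spark session that already has the Delta Lake package configured. The helper names (`read_version`, `read_as_of`, `rows_added_since`) and the table path in the usage note are hypothetical; only the `versionAsOf` and `timestampAsOf` options come from Delta Lake.

```python
from __future__ import annotations

from typing import TYPE_CHECKING

if TYPE_CHECKING:  # imported for type hints only
    from pyspark.sql import DataFrame, SparkSession


def read_version(spark: SparkSession, path: str, version: int) -> DataFrame:
    """Read the table as it was at a specific commit version."""
    return spark.read.format("delta").option("versionAsOf", version).load(path)


def read_as_of(spark: SparkSession, path: str, timestamp: str) -> DataFrame:
    """Read the table as it was at a timestamp, e.g. '2024-01-31 00:00:00'."""
    return spark.read.format("delta").option("timestampAsOf", timestamp).load(path)


def rows_added_since(spark: SparkSession, path: str, old_version: int) -> DataFrame:
    """Rows present in the current table but absent from old_version."""
    current = spark.read.format("delta").load(path)
    return current.exceptAll(read_version(spark, path, old_version))
```

For a quick validation of a change, something like `rows_added_since(spark, "/tmp/delta/events", 0).count()` shows how many rows were added since the first commit; the path here is a placeholder.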

Introduces spark.sql.shuffle.partitions, repartition, and coalesce with a reproducible example that shows their impact on stage count, run time, and shuffle size.

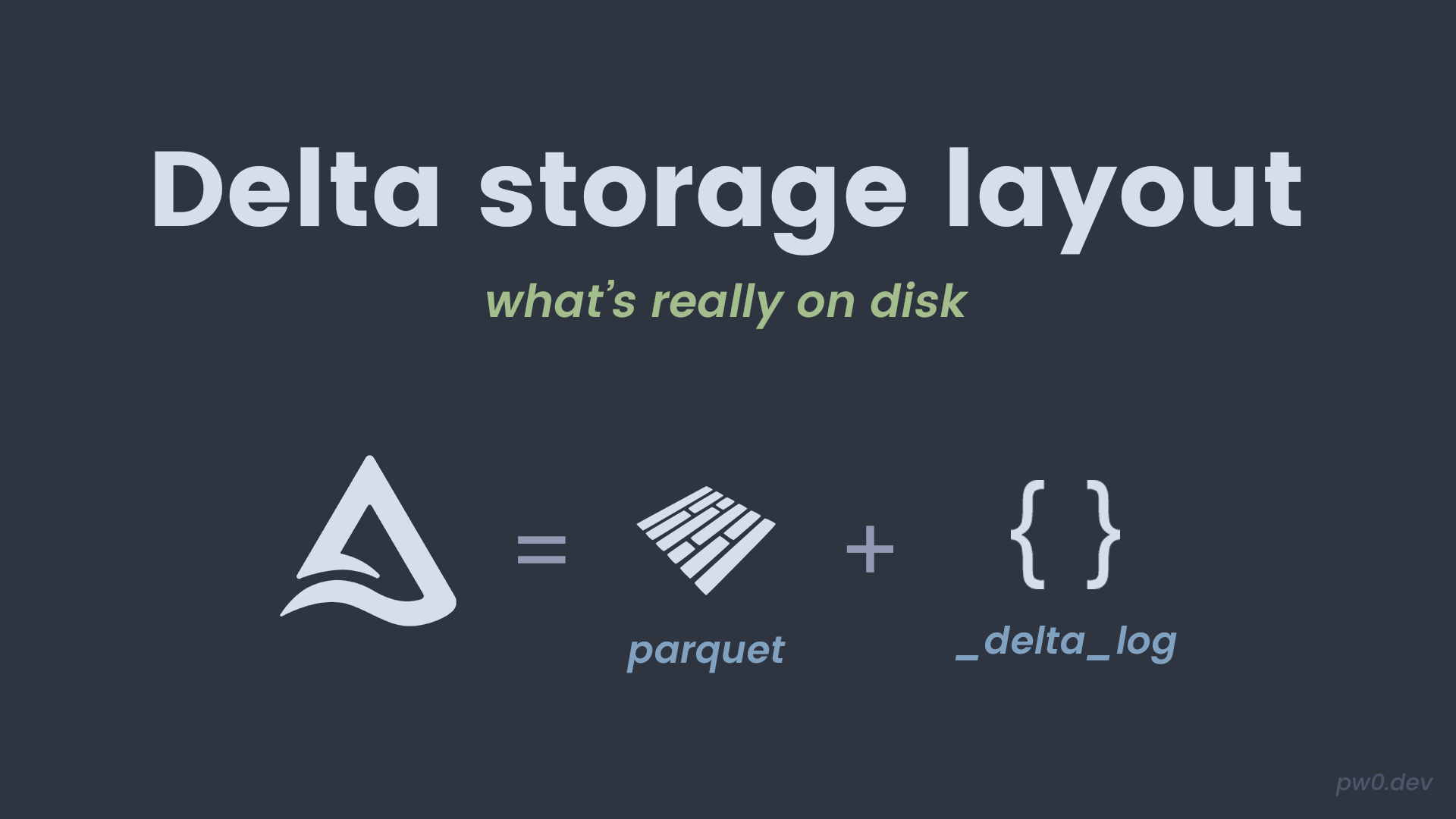

Explore a Delta table's on‑disk layout, including its commits and checkpoints, and see why that layout matters for performance, maintenance, and troubleshooting in production.
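To make the layout concrete: a Delta table keeps its transaction log in a `_delta_log` directory next to the data files, with one zero-padded JSON file per commit, periodic Parquet checkpoints, and a `_last_checkpoint` pointer. The helper below is a hypothetical inspection sketch that uses only the Python standard library and assumes the table lives on a local filesystem.

```python
import re
from pathlib import Path

# Commit files look like 00000000000000000003.json (20-digit version).
COMMIT_RE = re.compile(r"^(\d{20})\.json$")
# Checkpoints look like 00000000000000000010.checkpoint.parquet,
# optionally split into parts: <version>.checkpoint.<part>.<total>.parquet
CHECKPOINT_RE = re.compile(r"^(\d{20})\.checkpoint(\.\d+\.\d+)?\.parquet$")


def summarize_delta_log(table_path):
    """Return commit versions, checkpoint versions, and pointer presence."""
    log_dir = Path(table_path) / "_delta_log"
    commits, checkpoints = [], []
    for entry in log_dir.iterdir():
        commit = COMMIT_RE.match(entry.name)
        checkpoint = CHECKPOINT_RE.match(entry.name)
        if commit:
            commits.append(int(commit.group(1)))
        elif checkpoint:
            checkpoints.append(int(checkpoint.group(1)))
    return {
        "commits": sorted(commits),
        "checkpoints": sorted(set(checkpoints)),  # multi-part files share a version
        "has_last_checkpoint": (log_dir / "_last_checkpoint").exists(),
    }
```

Calling `summarize_delta_log("/path/to/table")` on a real table gives a quick view of how many commits have accumulated since the last checkpoint, which is often the first thing to check when reads of the log feel slow.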

End‑to‑end walkthrough: create a Delta table, insert data, read, filter, and validate results with expected outputs. The minimal base before any optimization work.