Arregla joins con skew en Spark usando salting

Detecta joins con skew en Spark y aplica salting para repartir las llaves “hot”. Verás el antes/después con tiempos de stage y shuffle, una repro sintética y un dataset real con descargas al final.

Detecta joins con skew en Spark y aplica salting para repartir las llaves “hot”. Verás el antes/después con tiempos de stage y shuffle, una repro sintética y un dataset real con descargas al final.

Implementa `SimpleDataSourceStreamReader`, define schema y offsets, y expone un formato propio para leer eventos en streaming con control y observabilidad, sin depender de connectors externos.

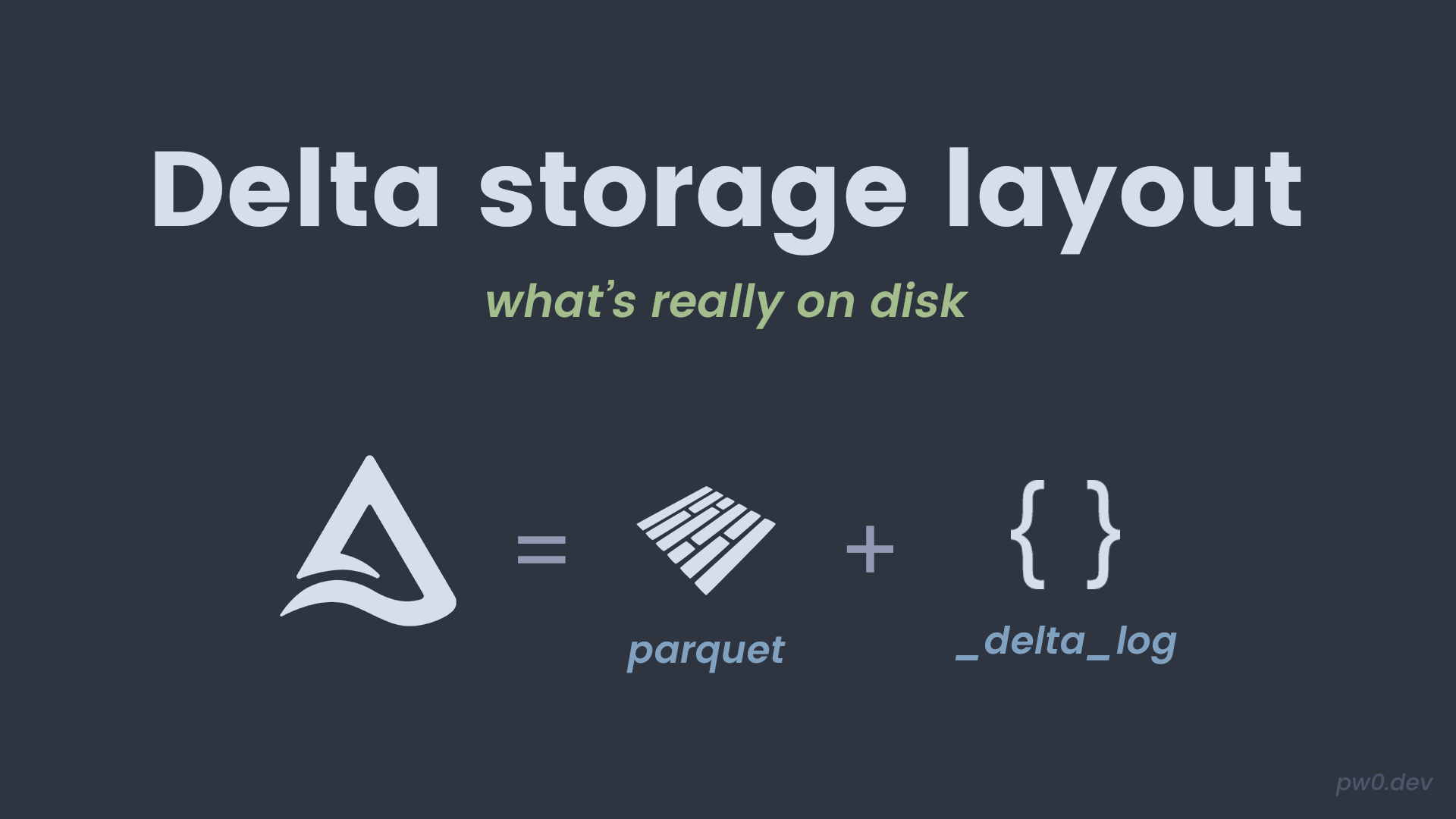

Explora el layout en disco, commits y checkpoints, y entiende por qué esto importa para performance, mantenimiento y troubleshooting en producción.

Recorrido end‑to‑end: crear tabla Delta, insertar datos, leer, filtrar y validar resultados con salidas esperadas. Base mínima para entender Delta antes de optimizar.

Aprende `versionAsOf` y `timestampAsOf`, valida cambios y entiende cuándo usar time travel para auditoría, recovery y análisis de regresiones en Delta Lake.

Conecta Kafka local con Spark Structured Streaming, define un esquema y ejecuta una lectura continua. Verás métricas simples y validaciones para confirmar que el stream funciona.

Introduce `spark.sql.shuffle.partitions`, repartition y coalesce con un ejemplo reproducible para ver impacto en tiempos, stages y tamaño de shuffle.

Guía práctica con ejemplos claros y salidas esperadas para dominar transformaciones básicas en DataFrames. Incluye patrones de chaining legibles y validaciones rápidas.

Guía práctica para levantar el stack local, comprobar UI/health y correr un primer job. Incluye checks mínimos para confirmar Spark Master/Workers y que tu entorno quede listo para los posts.